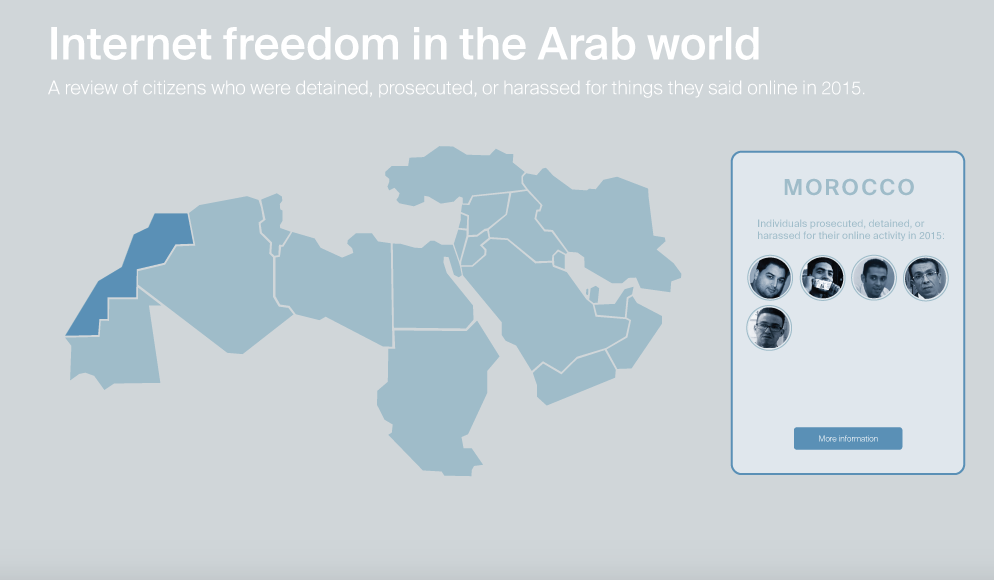

For my final ICM project, I created an interactive map tracking individuals in the Arab world who had been detained, prosecuted, or harassed by their governments in 2015 because of their online activity.

Data sources: Committee to Protect Journalists’ 2015 report on jailed journalists | Global Voices’ Digital Citizen project

When I initially proposed this project, I planned to limit my data to imprisoned journalists. As I did more research, however, I decided to broaden the scope to include activists, outspoken citizens, bloggers, and others who are receiving lengthy prison sentences for expressing themselves online. In many cases, individuals are being arbitrarily detained without any clear accusations or charges.

THE DATA

The data for this project came from several sources. First, I combed through the data collected by the Committee to Protect Journalists in its 2015 report on jailed journalists. Second, I consulted Global Voices’ outstanding project Digital Citizen, a biweekly review of human rights in the Arab world. For every individual, I found at least one other piece of journalism online confirming the incident. The result was a long list of individuals who had been detained, prosecuted, physically harassed, or killed by their governments between December 2014 and December 2015.

OVERALL TRENDS

Here’s what is most troubling: The number of individuals being targeted for their online behavior in the Arab world is increasing. According to Freedom House’s 2015 Freedom on the Net Report, in the past year there was a spike in public floggings of liberal bloggers, life sentences for online critics, and beheadings of internet-based journalists in the Middle East.

The report states that in the past year “penalties for online expression reached a new level of severity as both authorities and criminal groups made public examples of internet users who opposed their agenda.” In Egypt, for instance, two journalists received life sentences for their online coverage of a violent government crackdown on a Muslim Brotherhood protest.

THE LEGAL CLIMATE

With this project, I wanted to explore the factors driving the rise in imprisonments and detentions for online behavior. Specifically, I was interested in how the legal climate and attitudes towards the internet in each of these countries contribute to the problem.

The adoption of sweeping cybersecurity and anti-terrorism laws in 2015 has been cited as one of the major causes of increased imprisonments. This year, Mauritania proposed two draft laws on cybercrime and the information economy that punish “insults” against the government with up to seven years in jail. Tunisia passed a counter-terrorism law that arbitrarily restricts freedom of expression. A new freedom of information act was passed in Sudan that legalizes government censorship. This year Egypt passed a number of cybercrime and anti-terrorism laws that criminalize broad online offenses, allowing the government to crack down on human rights activists. The Jordanian government broadened its legal definition of “terrorism” to include critics who “disturb its relations with a foreign state.” Kuwait adopted a controversial anti-terrorism law. Other countries in the region continue to enforce their cybercrime and anti-terror laws, including the U.A.E., which has been known to give the death penalty for defamation charges.

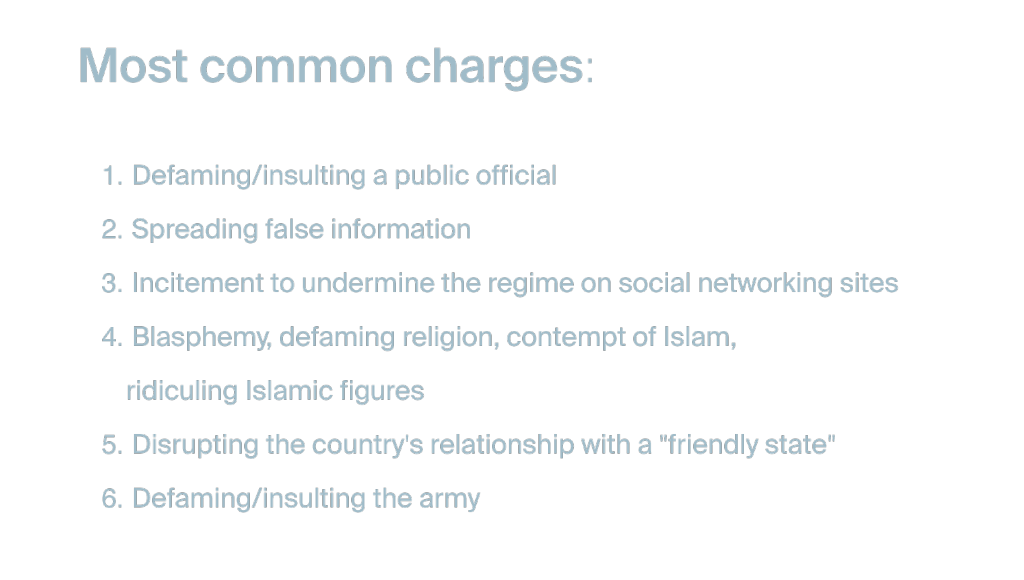

A quick look at the data suggests that these were the charges most often brought against individuals:

I plan to continue investigating this issue in order to better understand why there has been an uptick in human rights abuses against journalists, bloggers, activists, and other internet users.
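For anyone who wants to reproduce a tally of the most common charges, here is a minimal sketch of how it could be done. It is not the code behind the map: the record layout (`name`, `country`, `charges`) and every value in it are hypothetical placeholders, and the real data set may be structured differently.

```python
from collections import Counter

# Hypothetical records: field names and values are placeholders, not real data.
records = [
    {"name": "Person A", "country": "Country X", "charges": ["charge_a", "charge_b"]},
    {"name": "Person B", "country": "Country Y", "charges": ["charge_a"]},
    {"name": "Person C", "country": "Country X", "charges": []},  # held without a stated charge
]


def tally_charges(rows):
    """Count how many individuals face each charge, most common first."""
    counts = Counter()
    for row in rows:
        # Use a set so a charge listed twice for one person is counted once.
        counts.update(set(row["charges"]))
    return counts.most_common()


charge_counts = tally_charges(records)
for charge, total in charge_counts:
    print(f"{charge}: {total}")
```

Individuals detained without any stated charge have an empty list here, so they add nothing to the tally; they would need to be counted separately.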

A WORD ABOUT THE DATA

A major limitation of this data set: It is impossible to have a complete picture of human rights abuses right now. We do not yet have access to information about every detained or imprisoned citizen in the Middle East. For countries in which there is no rule of law (e.g. Libya and Syria), access to information about killings and detentions is limited.

I will keep adding individuals to the map as the media continues to report on human rights abuses that occurred in the past year.

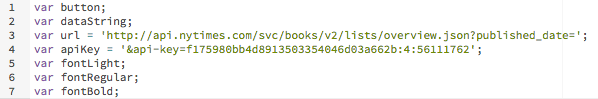

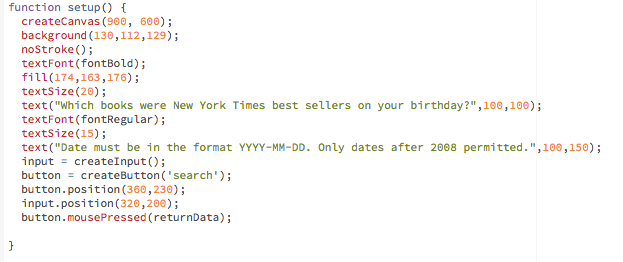

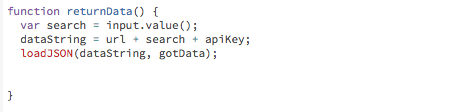

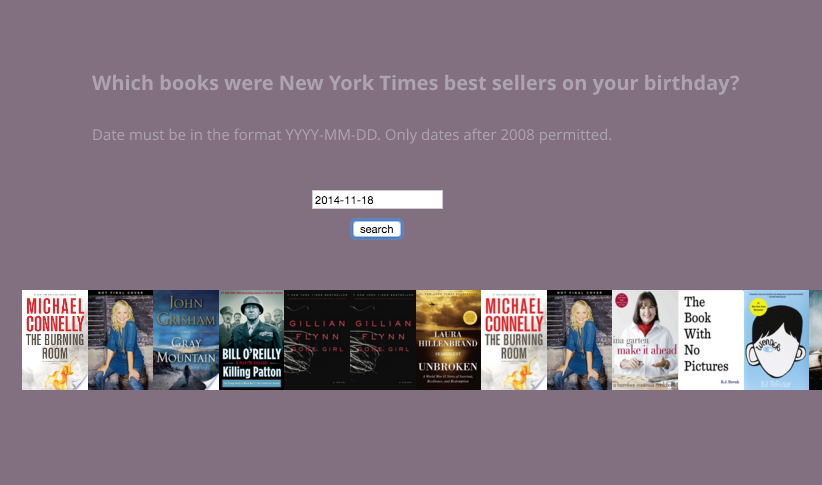

That’s it! I got the search working. There were several lingering issues with the sketch that I didn’t have enough time to resolve, namely:
