I’ve been thinking more about what I am going to be titling this body of work as well as an upcoming workshop I will be hosting at the MAL next week. I’ve titled the work Knitting with machines: Imagining softer futures through ‘string figures.’

The term “string figures” is one of the SF practices (science fiction, speculative fabulation, speculative feminism, etc) introduced by feminist STS scholar Donna Haraway in her book Staying with the Trouble. These practices enable us to fabricate futures that connect us to our shared communities in the face of a challenging present. Like knitting, string figures are loops, but they are also ways of weaving stories together. In my work, I am approaching knitting as both metaphor and material for exploring futures that promote connection, community, and embodied knowledge(s).

This past week, I’ve been reading Pat Treusch’s book Robotic Knitting: Re-Crafting Human-Robot Collaboration Through Careful Coboting, in which she details her attempts to teach a robot how to knit. I have been soaking up some of the language she uses to describe her approach to robotic knitting. She describes the process of robotic knitting as a ”methodological tool and analytical frame for contemporary technofeminism” (Treusch 10). Technofeminism, according to Judy Wajcman, enables new forms of inquiry that push us towards a more just and equitable world.

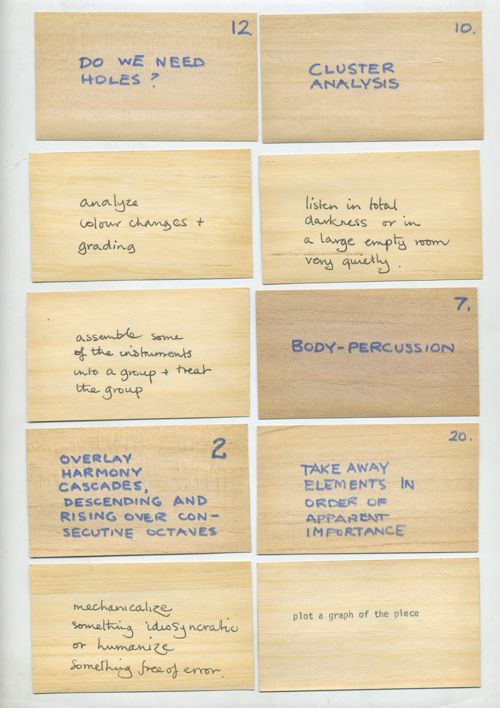

Robotic knitting, Treusch says, is an “interventionist practice” and “generative, playful engagement” (10) with an open situation, resulting in diverse forms of knowledge. I have been thinking about my own experimentation with knitting and yarn as a playful approach to untangling meaning and materiality. I’ve written before on this blog about how my approach to art-making is sometimes scattered or emergent, but also intensely relational.

“Playing with yarn can be considered a practice of producing new stories.”

Pat Treusch

Like Donna Haraway untangling a ball of yarn during an interview, I think that in my work I attempt to create meaning through a process of unwinding, untangling, pulling and stretching, knitting and unraveling, with often surprising outcomes. Yarn is both metaphor and material (referencing a line from Sadie Plant’s book Zeros + Ones).

Donna Haraway describes this kind of knowledge-production as “situated knowledge”: the idea that specific assemblages of bodies, time, space, material, and power relations produce different, site-specific forms of knowledge. We get only a partial view of that system, but the partial/local view tells us more than a universal view.

“It matters what matters we use to think other matters with; it matters what stories we tell to tell other stories with; it matters what knots knot knots, what thoughts think thoughts, what ties tie ties. It matters what stories make worlds, what worlds make stories.”

Donna Haraway, Staying with the Trouble

I will continue pulling these intellectual threads (ha!) through my work, especially as I look towards designing a workshop that invites new modes of inquiry and research.

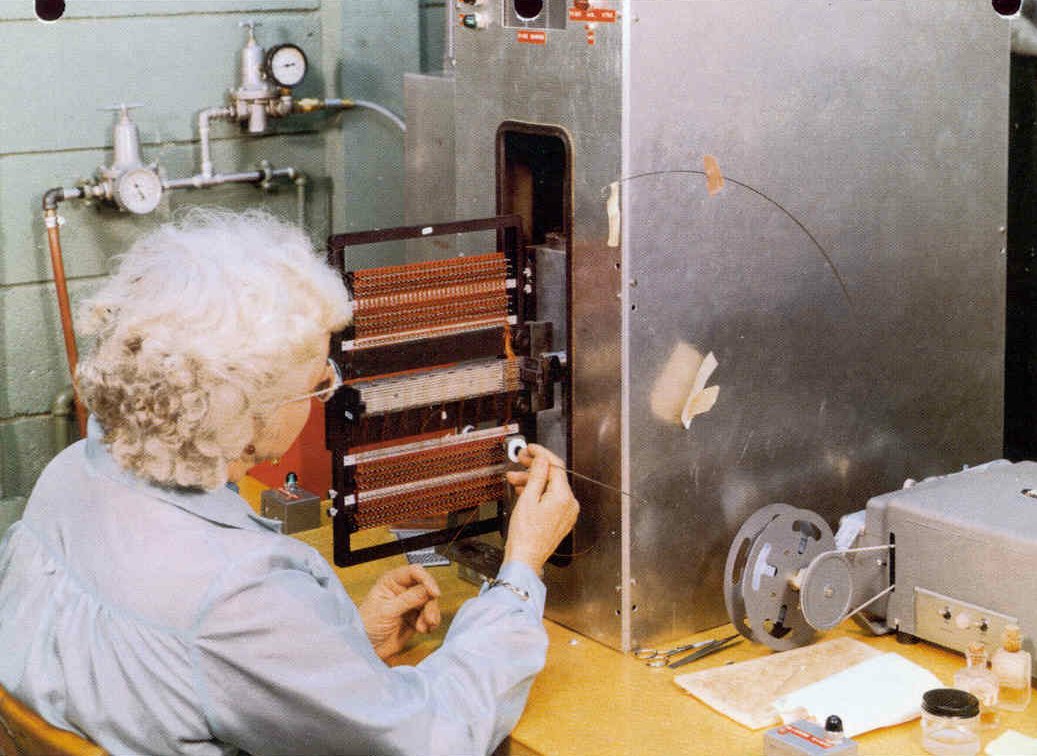

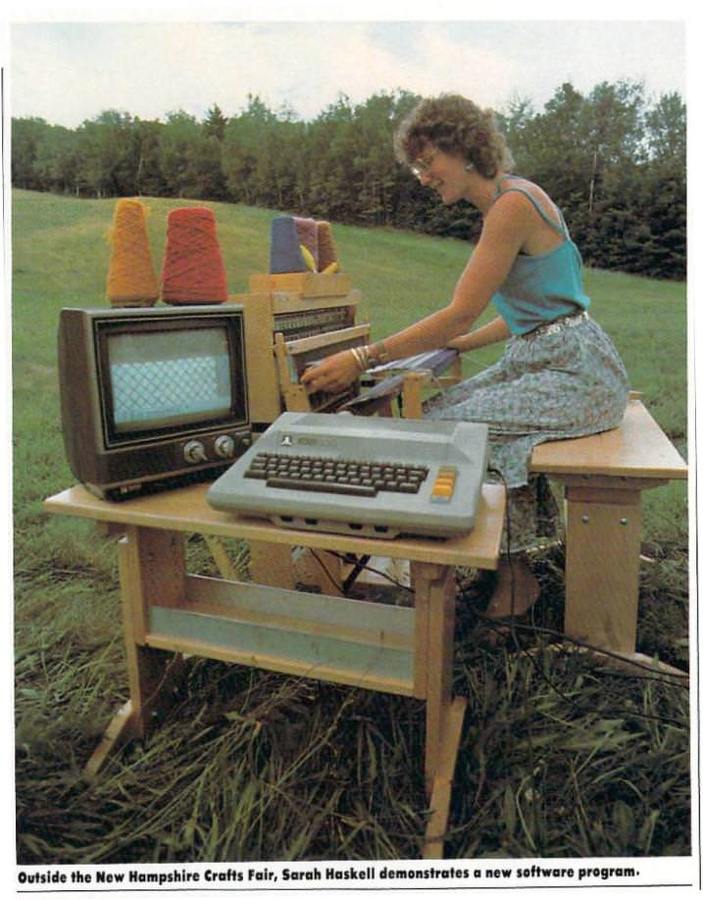

On Friday, I had the opportunity to visit the Unstable Design Lab at ATLAS, led by Laura Devendorf. It was really inspiring to see how the group is exploring cutting-edge weaving techniques, including building new software tools for sketching 3D parametric surfaces and experimenting with thermochromic materials that change color when heat is applied. When I talked about my project, Laura mentioned that the old patents for domestic knitting machines are quite revealing as artifacts of the past: Apparently, they use gendered language that reflects how the patent writer anticipated such machines might be used by women in the home. I am really interested in digging more into those patents this week.

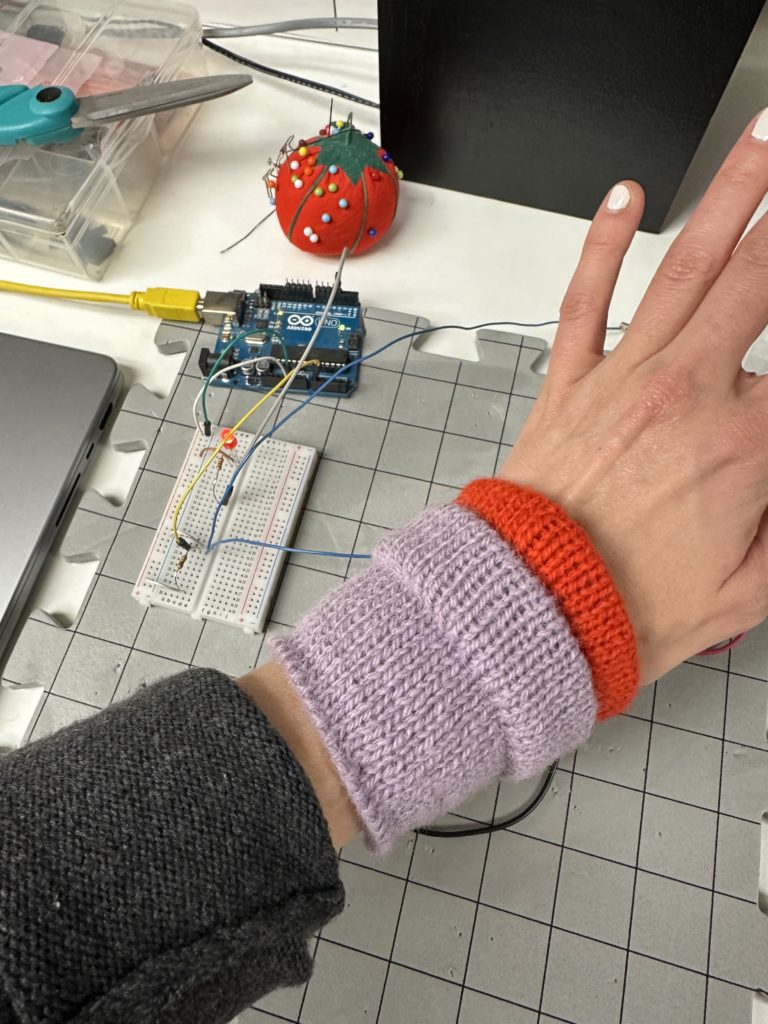

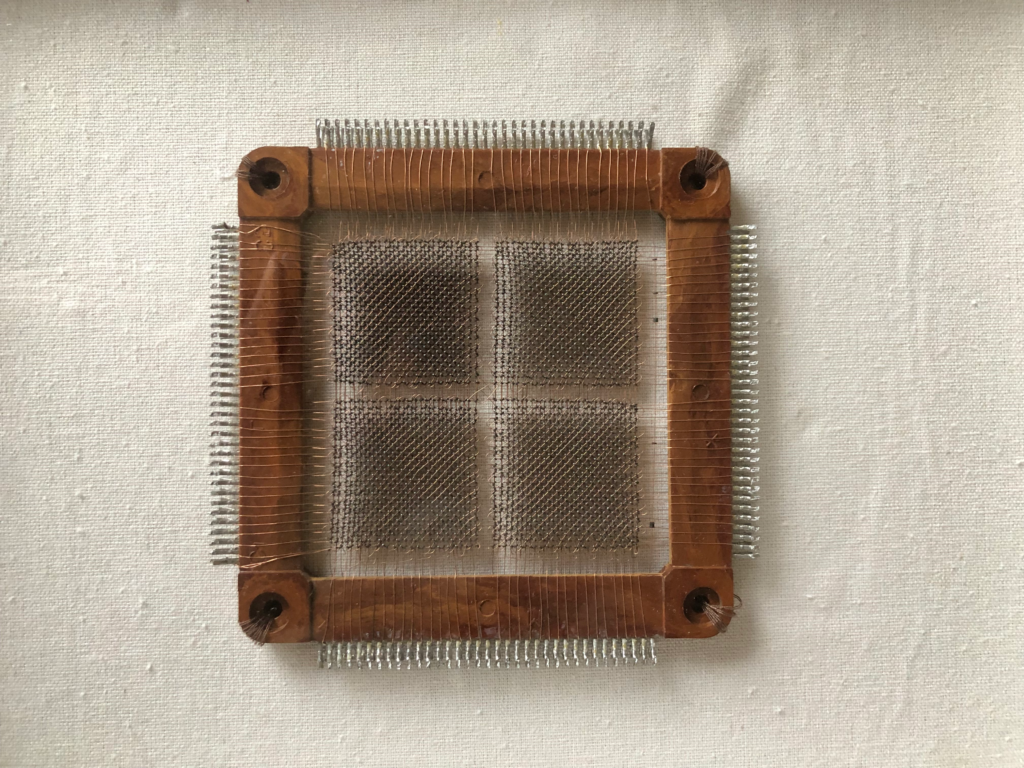

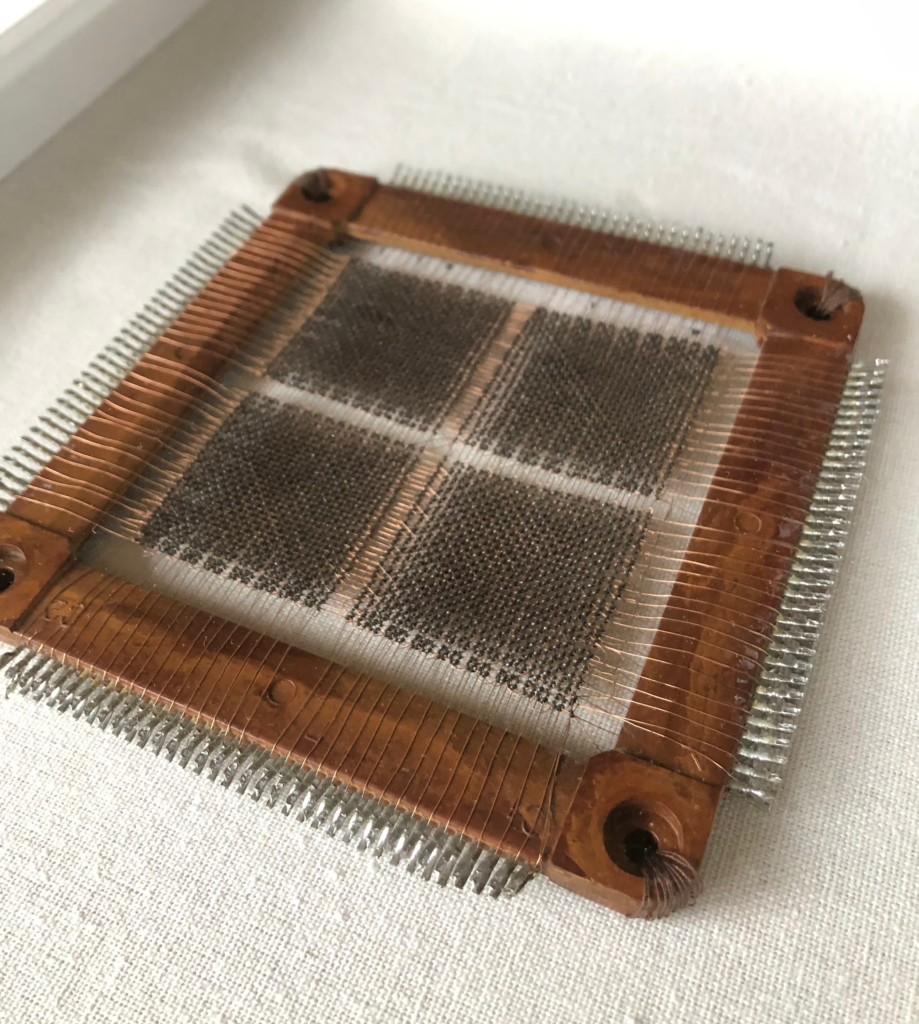

Over the past few days, I’ve been pushing myself to experiment more with 3D knitted structures, using a technique called hand-manipulated stitches on the knitting machine. I knit a few different versions of a zig zag stitch, as shown in the previous post. Next, I experimented with combining weaving with the zig zag stitch, weaving a spool of wire into the knit.

I also learned how to do a tuck stitch, which produces a thicker, more durable fabric to work with overall. I love how the two yarns look next to each other in the tuck stitch.

I also learned how to use the hold setting of the knitting machine to knit short rows. I learned how to knit a rounded ladder, and then knit a few different pouches using this technique. As I have been experimenting with different kinds of sensors, I am thinking about how to weave, hide, or store various electronic components within the garment so that they are hidden from view. This technique was really helpful for thinking about how to create small pockets.

Next, I want to experiment with a few more sensors and feedback, including the touch sensor and haptic motors.

References (in the order referenced)

Donna Haraway, Staying with the Trouble: Making Kin in the Chthulucene

Pat Treusch, Robotic Knitting: Re-Crafting Human-Robot Collaboration Through Careful Coboting

Judy Wajcman, TechnoFeminism

Sadie Plant, Zeros + Ones

Donna Haraway, “Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective”